Could you introduce yourself and tell us about your background, how you became interested in IA music and sonification? Could you tell us about your work in generative music, especially in text sonification?

My name is Hannah Davis! My work lives at www.hannahishere.com, musicfromtext.com, and I’m @ahandvanish on Twitter.

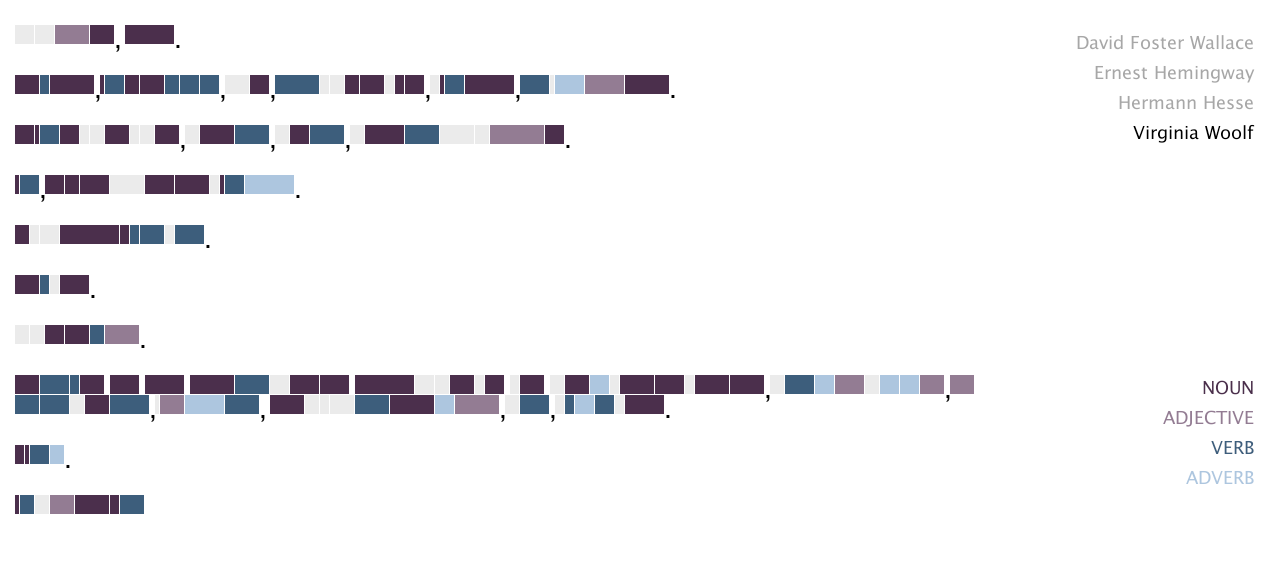

I became interested in sonification during my time at New York University’s ITP program. An algorithm I wrote called TransProse, which turns books into music, was my thesis while I was there. TransProse works by getting ‘emotion counts’ throughout a book, and turns that emotion data into music by different mappings. Some examples of mappings can be that the octave is influenced by the sadness or anger, or the tempo can relate to how ‘active’ the emotions are, or the amount of activity. There are many ways to map music to emotions — it’s very subjective.

For a few years afterwards I worked in data visualization, and then focused on data sonification. I’ve always been interested in music – when I was younger I played in a baroque recorder ensemble, and in my early adult years played a lot of percussion and guitar – and so getting involved with generative music was a natural direction for me.

How would you describe the specificities of data sonification, compared with data visualisation?

Data sonification is like data visualization, but instead of representing data through graphs, you’re representing it through sound. Data sonification has a different set of strengths than data visualization. It’s great for time-based data, for anything multi-dimensional, and for anything that has cycles – it makes it very easy to find (or hear) patterns in the data. It’s really good for small units – my favorite along these lines is a piece Amanda Cox did for the New York Times many years ago. It’s also possible to create interesting generative music with data – I like James Murphy’s set of pieces that use tennis data.

It’s also good for emotional data, because sound and music are such excellent conveyers of emotion.

There is a link between the statistical weight of a word in a language (Zipf’s Law) and esthetical balance between predictibility and unpredictibility, for example in noise 1/f. Is it one of the reasons you ended up with text sonification? Are you interested in this kind of approach?

I am super interested in these ideas, but they are not driving factors in my work. The features I work with tend to me higher-level abstractions, such as emotions.

How do you deal with the question of complexity of emotional interpretation in musical mappings (for example, there is some happy music in minor keys, and some very sad music in major keys)? Do you intend to use machine learning for define more ambiguous parameters in the future?

I generally deal with this type of complexity by creating mappings and features that contain that complexity. One thing I try not to do is truncate the data – i.e., if ‘joy’ is the highest emotion of a text, I don’t make a piece only with the joy emotion, but use ratios of joy to other emotions as well. It is difficult to generalize and always create complex pieces, but my work is very iterative, and I believe it will always get better.

I haven’t used machine learning in my text-to-music program yet, but I have some ideas. It will be interesting to see the output from a machine learning program versus the current outputs.

Would you say that every kind of data set could generate an interesting music, and under what conditions? What would be the perfect data set for sonifying?

Yes, I do believe that! I think it one hundred percent comes down to the person sonifying it, however. I really love working with novels and other chronological text data, because to me, they have natural peaks and valleys, climaxes and conclusions, that help create natural-sounding music.

Could you tell us about your musical twitter bots?

There is a big creative Twitter bot community – bots that write poems, that post beautiful images, that generally bring some serendipity to the day. I created my Twitter bot class to bring more attention to this area of the world, and I created the Twitter audio pipeline in the hopes that people would start creating audio Twitter bots. Most of the bots are offline right now, but I have code online. I made the pipeline because Twitter doesn’t support uploading audio files, so the code here takes a piece of generated music, wraps it with an image, and uploads it to Twitter as a video. The first Twitter audio bot I did took the tweets of @Pentametron, which is a famous Twitter bot, and created snippets of audio that mirrored its tweets.

Could you speak about your collaborations with composers, especially with Mathieu Lamboley on Accenture?

Collaborating with Mathieu was a very short but very fun project. I generated three pieces based on news articles with different themes – i.e. the “rise of technology.” He took those pieces and fleshed it out into a three-part symphony, which was performed at The Louvre in Paris and the BMW Museum in Munich.

In early cinema, a pianist improvised to the images on the screen, following the story of the movie. Likewise, you propose a software that automatically improvise music emotionally related to moving pictures. Could you tell us about this exciting project?

This project is in the very beginning stages! Because of my work creating music from text, I believe it is possible to create music from video as well. The composition process would be very different – instead of creating a minute-long piece that represents the work, as I did with the novels, this would be music that would play alongside the video. The constraints and objective would be to augment the film, not to represent it, necessarily. I’m applying for some grants to prototype it, so we’ll see….

There is a large field of on-going uses for AI composed music (music for Youtube contents, video games…). How do you see the future of this?

It’s a mixed bag! I’m very uninterested in generic all-purpose music, especially where it puts musicians out of work, and I hope it doesn’t go in this direction. On the other hand, I think there is a lot of room for programmer-composers to come up with unique and interesting film and game music. I’d like to see the focus be on humans using AI to create new types of compositions, and less on humans writing programs to create never-ending music that sounds the same.

Have you any special challenges on this subject?

The main challenge to me is that, since this is such a new field in a way, the software and tools to create music from data and other information don’t really exist yet. This makes it hard to compose, to teach, and to allow non-programming musicians to take advantage of these new ideas. It would be very cool to see more of these types of software going forward.

(March 2018)